Ushur AI and ML Model Training Process - Ushur 1.0

The Jump on Phrase option in a module allows the Ushur to select a specific path based on input received from the end user. It takes the information that the end user provides and processes it using a selected AI (Artificial Intelligence) or ML (Machine Learning) model.
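The routing idea can be illustrated with a minimal sketch. Everything here is hypothetical and is not the Ushur API: `jump_on_phrase`, `toy_classifier`, the category names, and the branch names are invented for illustration, and a trivial keyword lookup stands in for the trained model.

```python
from typing import Callable, Dict


def jump_on_phrase(
    user_text: str,
    classify: Callable[[str], str],
    branches: Dict[str, str],
    default_branch: str = "fallback",
) -> str:
    """Return the name of the workflow branch to follow for user_text."""
    category = classify(user_text)  # stands in for the selected AI/ML model
    # Unknown categories fall through to a default path.
    return branches.get(category, default_branch)


def toy_classifier(text: str) -> str:
    """Stand-in for a trained model: a trivial keyword lookup."""
    lowered = text.lower()
    if "claim" in lowered:
        return "file_claim"
    if "address" in lowered:
        return "update_address"
    return "unknown"


# Hypothetical mapping from predicted category to the next module.
branches = {"file_claim": "ClaimsModule", "update_address": "AddressModule"}

print(jump_on_phrase("I need to file a claim", toy_classifier, branches))
print(jump_on_phrase("Good morning", toy_classifier, branches))
```

In this sketch the classifier is passed in as a function, so the routing logic stays the same whichever model produces the category.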

The performance of each model is highly dependent on the use case. Some models work best with a large number of training data samples while others may need keywords for better classification.

To use the Language Intelligence (LI) feature in the Ushur platform, first enable the Jump on Phrase option.

Enabling the Jump on Phrase Option on a Module

To enable Jump on Phrase, follow these steps:

Select the Ushur in which you want to use Jump on Phrase.

Select the Open Response/LI module for which you want to use the LI feature.

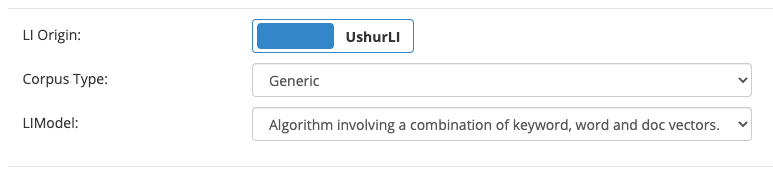

This displays the Open Response dialog box.

Select the Jump on Phrase check box.

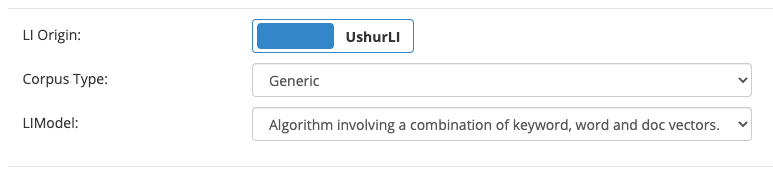

Click the Properties icon.

This displays the LIModel details.

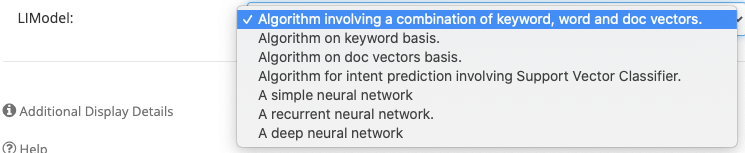

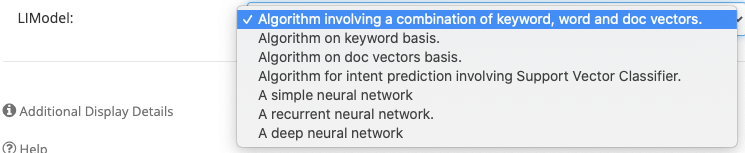

From the LIModel dropdown, select the option that you want to use.

The options include the following:

Algorithm involving a combination of keyword, word and doc vectors: Select this option if you want a general-purpose AI-enabled Ushur that combines the keyword, word vector, and document vector models.

Algorithm on keyword basis: Select this option if you want to extract word importance and use that as a feature for classification.

Algorithm on doc vectors basis: Select this option if you want to use document vector embeddings generated from word vector embeddings trained on Wikipedia.

Algorithm for intent prediction involving Support Vector Classifier: Select this option if you want the model to use pre-trained word vector embeddings with the Support Vector Machine classification algorithm.

A simple neural network: Select this option if you want to generate word embeddings based on subword information. This helps the model handle spelling mistakes and vocabulary that it has not seen before.

A recurrent neural network: Select this option if you want to use an LSTM layer that tunes its weights based on both the forward and backward directions of the dataset.

A deep neural network: Select this option if you want a model that leverages transfer learning to adapt to the dataset.

Note: For more information, refer to the table in the following section.

Click Save.
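To make the keyword-based option more concrete, the following is a minimal sketch of extracting word importance and using it as a classification feature. It assumes a TF-IDF style weighting, which is one common statistical measure; the source does not state which measure Ushur uses. The function names and training phrases are invented for illustration.

```python
import math
from collections import Counter


def keyword_importance(samples_by_category):
    """Score each word per category with a TF-IDF style weight.

    samples_by_category maps a category name to a list of training phrases.
    Each category is treated as one "document" for the IDF term.
    """
    term_counts = {
        category: Counter(
            word for phrase in phrases for word in phrase.lower().split()
        )
        for category, phrases in samples_by_category.items()
    }
    n_categories = len(term_counts)
    scores = {}
    for category, counts in term_counts.items():
        total = sum(counts.values())
        scores[category] = {}
        for word, count in counts.items():
            containing = sum(1 for c in term_counts.values() if word in c)
            # Smoothed IDF: words found in every category score lowest.
            idf = math.log((1 + n_categories) / (1 + containing)) + 1
            scores[category][word] = (count / total) * idf
    return scores


def classify(text, scores):
    """Pick the category whose keyword scores best match the text."""
    words = text.lower().split()
    totals = {
        category: sum(weights.get(word, 0.0) for word in words)
        for category, weights in scores.items()
    }
    return max(totals, key=totals.get)


# Invented training phrases for two hypothetical categories.
training = {
    "billing": ["pay my bill", "question about my bill", "bill payment failed"],
    "claims": ["file a claim", "status of my claim", "claim was denied"],
}
scores = keyword_importance(training)
print(classify("my bill is wrong", scores))
```

Words that never appear in the training phrases ("is" and "wrong" here) contribute nothing to the score, which is why this kind of model depends on training data that resembles the real input.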

Understanding Different LIModel Options

| LI Model (AI-enabled modules) | Data size per category (approximate) | Training time | Description |
| --- | --- | --- | --- |
| Algorithm involving a combination of keyword, word and doc vectors (Ensemble) | At least 15-20 samples | Less than 5 min | This model combines the document vector, word vector, and keyword-based models. Because the component models are fundamentally different, combining their results reduces variance and improves overall accuracy. The ensemble components are simple, so this achieves better prediction without increasing training time or computational overhead. |
| Algorithm on keyword basis | At least 15-20 samples | Less than 5 min | This model uses statistical measures to extract word importance and uses that as a feature for classification. It does not use any pre-training, so it only has importance scores for words in the training data and ignores everything else. It does not generate or use meaningful representations of words. Therefore, classification accuracy can be poor if the training data is not representative of the test or real-world data. |
| Algorithm on doc vectors basis | At least 15-20 samples | Less than 5 min | This method uses document vector embeddings generated from word vector embeddings trained on Wikipedia, which allows text to be encoded as meaningful vector representations. Because of this property, the method can work well with small amounts of data and can handle the vocabulary of Wikipedia data. Word order does not matter, which can hurt accuracy if your samples contain many associations or negations. |
| Algorithm for intent prediction using Support Vector Classifier | More than 50 samples but fewer than 500 samples | Less than 20 min | This method uses pre-trained word vector embeddings with the Support Vector Machine (SVM) classification algorithm. The fundamental difference from the models above is that they use a fixed similarity function that cannot be adapted to the requirements. This is problematic when representations are not at the same distance from each other or when there is an overlap. SVM tackles this by creating optimal separating hyperplanes between classes and using them for classification. The downside is that it is more computationally intensive than the models above and cannot handle huge datasets. |
| A simple neural network (fastText) | More than 1000 samples | Less than 30 min | This simple neural network generates word embeddings based on subword information, which helps it handle spelling mistakes and vocabulary that it has not seen before. It also uses techniques such as negative sampling and hierarchical softmax that make it very efficient with huge datasets. You can use pre-trained embeddings or train your own, which gives you more modeling flexibility. |
| A recurrent neural network | More than 1000 samples | More than 30 min | A recurrent neural network that uses an LSTM layer to tune its weights based on both the forward and backward directions of the dataset. Because it makes two passes over the dataset, this model is good at understanding language semantics. It is a fairly accurate model. |
| A deep neural network | More than 1000 samples | More than an hour | A deep neural network that leverages transfer learning to adapt to the dataset. This model has a rich understanding of the syntax and semantics of the language, which helps it classify data accurately. Training may take longer; however, it gives accurate results when given enough samples. |
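The ensemble row says that combining fundamentally different models reduces variance. The sketch below shows one simple way to combine results, by averaging per-category scores. Averaging is an assumption made for illustration, since the source does not describe how the Ushur ensemble merges its components, and the scores are made-up numbers.

```python
from typing import Dict, List


def ensemble_predict(model_outputs: List[Dict[str, float]]) -> str:
    """Average per-category scores across models and return the top category."""
    categories = model_outputs[0].keys()
    averaged = {
        category: sum(output[category] for output in model_outputs)
        / len(model_outputs)
        for category in categories
    }
    return max(averaged, key=averaged.get)


# Made-up scores from three hypothetical component models for one phrase.
keyword_model = {"billing": 0.40, "claims": 0.60}
word_vector_model = {"billing": 0.70, "claims": 0.30}
doc_vector_model = {"billing": 0.65, "claims": 0.35}

print(ensemble_predict([keyword_model, word_vector_model, doc_vector_model]))
```

Here the keyword model alone would pick "claims", but the other two components outweigh it, so a single model's mistake does not decide the result.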

Note: Actual implementation requirements may differ from the guidelines above depending on the quality of the sample data, the amount of noise in the data, and other factors. Discuss your needs with your assigned solution consultant before implementation.
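As a rough aid for reading the table, the data-size guidelines can be written as a small helper. This is only a sketch: `candidate_models` is a hypothetical function, the lower bound of 15 is an assumption (the table gives a 15-20 range), and the table gives no guidance for 500 to 1000 samples beyond the three lightweight models.

```python
from typing import List


def candidate_models(samples_per_category: int) -> List[str]:
    """Return LI model options whose table guideline fits the sample count."""
    candidates: List[str] = []
    # "At least 15-20 samples": 15 is used here as the assumed minimum.
    if samples_per_category >= 15:
        candidates += [
            "Ensemble (keyword, word and doc vectors)",
            "Keyword basis",
            "Doc vectors basis",
        ]
    # "More than 50 samples but fewer than 500 samples".
    if 50 < samples_per_category < 500:
        candidates.append("Support Vector Classifier")
    # "More than 1000 samples".
    if samples_per_category > 1000:
        candidates += [
            "Simple neural network",
            "Recurrent neural network",
            "Deep neural network",
        ]
    return candidates


for n in (10, 100, 2000):
    print(n, candidate_models(n))
```

The helper only filters by sample count; training time, data quality, and noise still need to be weighed separately.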